ChatGPT Data Security Concerns

Artificial intelligence is evolving rapidly, and ChatGPT has emerged as a leading conversational AI tool. However, as with any AI platform, data security concerns are inevitable and warrant close scrutiny. This article explores data security in ChatGPT from several angles, emphasising the importance of protecting user information in the digital age.

Understanding ChatGPT's Data Use

ChatGPT, developed by OpenAI, uses vast datasets to train its language models, ensuring responses are not only relevant but also contextually aware. It processes user inputs to generate conversational outputs, raising questions about what data is collected and how it is used. Users contribute data that can range from mundane to sensitive; hence, understanding the nature of this data collection is crucial for informed usage.

Beyond data that users submit directly, there is growing concern about indirect data acquisition, such as scraping social media and other online platforms. This raises the question of how much publicly available data AI systems absorb without the knowledge of the people who posted it.

Data Security Concerns

The core security concerns around ChatGPT and other LLMs are unauthorised data access, leaks, and misuse. These risks are not purely hypothetical: in March 2023, OpenAI disclosed that a bug in an open-source library had briefly exposed some users' chat titles and, for a small share of ChatGPT Plus subscribers, payment-related details. Incidents of this kind underline the need for robust security measures.

Companies like Cloudflare are developing firewalls that aim to stop automated bots from scraping website content and learning from it. However, these solutions cannot fully prevent custom-made bots from harvesting data, which illustrates the ongoing arms race in digital security.
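As a rough illustration of the simplest layer of this defence, the sketch below uses Python's standard `urllib.robotparser` to check whether a crawler is permitted by a site's `robots.txt`. The rules and the `example.com` URL are hypothetical; `GPTBot` is the user agent OpenAI publishes for its web crawler. Note that `robots.txt` is advisory only: a well-behaved crawler honours it, while a custom-made bot can simply ignore it, which is why firewall-level blocking exists at all.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: ask OpenAI's GPTBot crawler to
# stay away from the whole site, and allow every other user agent.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots.txt permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/post"  # placeholder URL
    for agent in ("GPTBot", "Mozilla/5.0"):
        print(agent, "allowed:", is_allowed(agent, page))
```

A scraper that never consults `robots.txt` is unaffected by these rules, so this should be read as a statement of intent rather than an enforcement mechanism.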

Addressing ChatGPT's Security Vulnerabilities

Given the sensitive nature of user data, robust security measures are imperative for any ChatGPT integration. A comprehensive ChatGPT risk assessment should be conducted to identify potential vulnerabilities and mitigate them effectively. Typical mitigations include encryption protocols, access controls, and regular security audits to ensure compliance with data protection regulations.

User Data Privacy

OpenAI has outlined policies to safeguard user data privacy, stressing transparency and control. Users are encouraged to review these policies to understand how their data is handled, retained, and potentially removed. The real challenge lies in balancing data utility for AI improvement with stringent privacy protections.

The general public's limited awareness and understanding of data privacy is a significant issue. Many users, especially those who are not technically inclined, may not realise that AI platforms can harvest their social media posts and interactions. This risks unintentional disclosure of personal information and can lead to the unintended spread of business secrets or sensitive data.

The problem is compounded by the growing number of OpenAI competitors: there is no certainty that all of them follow the same regulations and established rules, or consistently uphold ethical standards.

Impact of International Data Protection Laws

The data protection landscape varies significantly across jurisdictions. Laws such as the European Union's General Data Protection Regulation (GDPR) and California's Consumer Privacy Act (CCPA) set expectations for the security safeguards and user consent required when personal data is processed. These laws affect how AI platforms like ChatGPT collect, store, and utilise data.

However, many companies and organisations are concerned that such limitations and regulations might impair competitiveness. They argue that stringent data protection laws can stifle innovation and put them at a disadvantage compared to competitors who may not be bound by similar rules, particularly in regions with less stringent data privacy requirements. This creates a complex dynamic where the drive for business excellence and market leadership must be balanced with legal compliance and ethical data management practices.

The international patchwork of data protection laws underscores global compliance's complexity, presenting challenges and opportunities for enhancing user trust and data security while maintaining competitive agility.

Moreover, the industry needs to grapple with a situation many did not anticipate: the rapid proliferation of AI services like ChatGPT has outpaced regulatory development. This has led to a reactive rather than proactive regulatory environment, where laws and guidelines are being established after significant market growth has already occurred. A common view is that these regulations and standards should have been developed years ago, laying the groundwork for a safer and more controlled expansion of AI services rather than playing catch-up in an already bustling marketplace.

Data Accuracy and AI Content Cycles

A burgeoning issue within AI systems is the reliance on data that may itself be generated by other AI models. This creates a feedback loop where the original inaccuracies or biases get amplified over time, compounded by the lack of human moderation or fact-checking. As AI-generated content becomes more prevalent, distinguishing between human-created and AI-created content becomes harder, raising concerns about data integrity and the propagation of misinformation. Ensuring data accuracy and implementing robust moderation systems are essential to mitigate these risks and maintain the integrity of AI platforms.

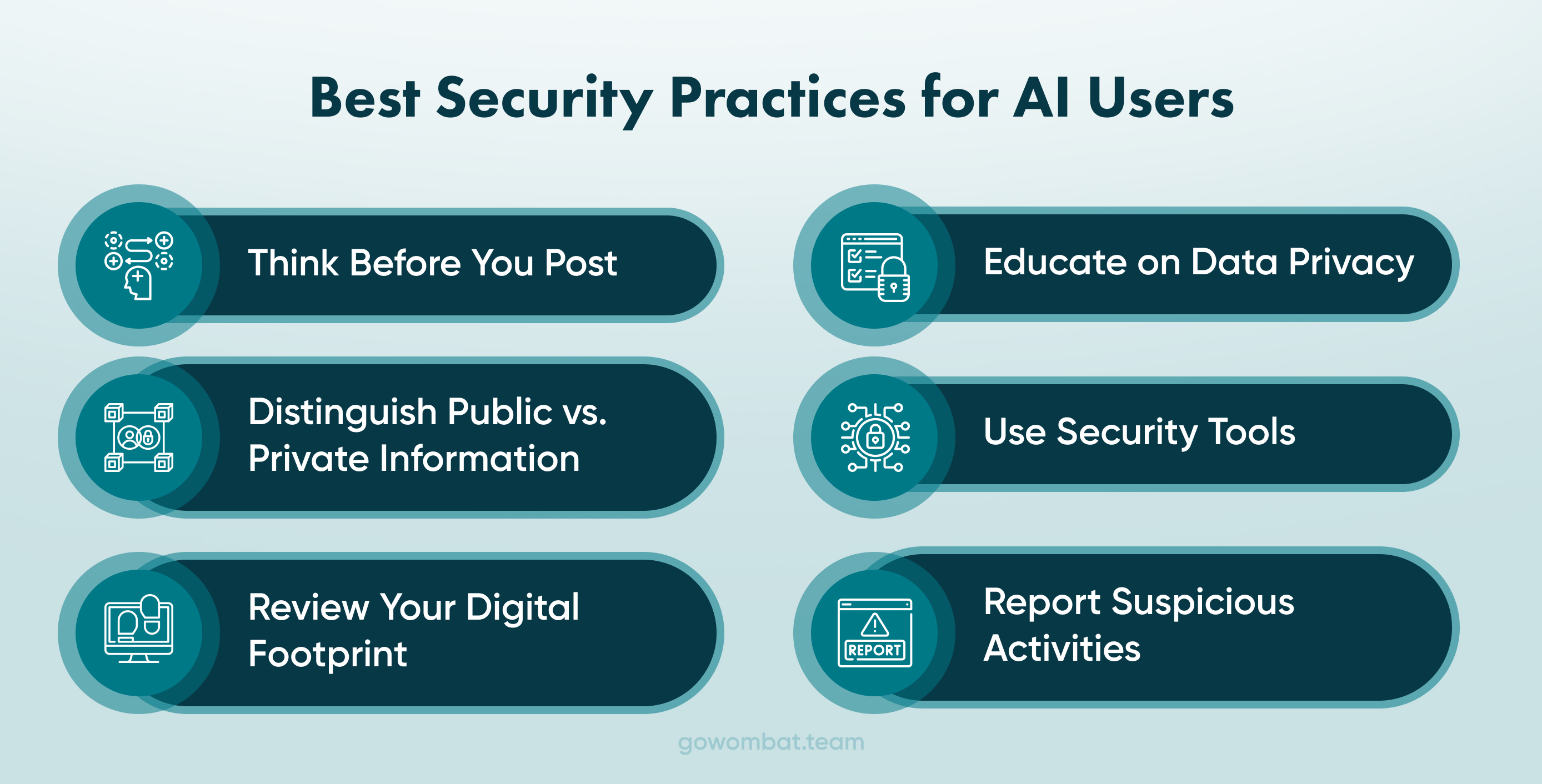

Best Security Practices for AI Users

Users play a critical role in maintaining their data security. Employing best practices such as avoiding sharing sensitive information, being cautious of phishing attempts, and understanding privacy settings can significantly mitigate risks. Awareness and proactive measures are fundamental to a safe user experience.
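One way to act on the advice about not sharing sensitive information is to scrub a prompt before it leaves your machine. The following is a minimal sketch, not a complete solution: the `redact` function and its three regular expressions are illustrative assumptions that catch only obvious email addresses, card-like numbers, and phone-like numbers. Real personal-data detection needs much broader coverage and review.

```python
import re

# Illustrative patterns only (assumptions for this sketch). Order matters:
# card-like numbers are replaced before the looser phone pattern runs.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace obvious sensitive values with bracketed placeholders."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = (
        "Email jane.doe@example.com or call +44 20 7946 0958 "
        "about card 4111 1111 1111 1111."
    )
    # Only the redacted version would be pasted into a chat or sent to an API.
    print(redact(prompt))
```

Placeholders such as `[EMAIL]` keep the prompt readable for the model while the original values stay local.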

Each user and company should operate under the assumption that any message or media posted online could be used by others, including for the training of AI models. This reality underscores the importance of mindfulness in online interactions.

Here are critical practices to consider:

- Consider the content and potential reach of your messages or media before hitting the SEND button. Once information is online, it can be difficult, if not impossible, to retract it fully.

- Distinguish between what is meant for public consumption and what should remain private. Adjust privacy settings on social media platforms accordingly, and be mindful of where and how you share sensitive information.

- Review your online presence and the information you have shared on different platforms. This can help you understand what data is available about you and may influence your future sharing decisions.

- Educate yourself and others by staying informed about data privacy, the capabilities of AI technologies, and the implications of sharing data online. Share this knowledge with peers, employees, and family members to enhance digital security collectively.

- Protect your personal information by using available security features and tools provided by platforms. This includes using strong, unique passwords and enabling two-factor authentication where possible.

- If you encounter suspicious activities or believe your data is being misused, report it to the relevant platforms or authorities. Awareness and action can prevent further misuse.

By adopting these practices, users can contribute to a safer digital environment while protecting their own and others' data from unintended use, including in training AI models.
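For the practice of using strong, unique passwords, a password manager is usually the most practical route. As a small sketch of what such a tool does under the hood, the snippet below generates a random password with Python's standard `secrets` module. The `generate_password` helper, the 20-character default, and the 12-character minimum are assumptions made for this example, not a recommendation from any particular platform.

```python
import secrets
import string

# Character pool: letters, digits, and ASCII punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Generate a random password containing all four character classes."""
    if length < 12:  # assumed minimum for this sketch
        raise ValueError("use at least 12 characters")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until lower case, upper case, digit, and symbol all appear.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    # Generate a separate password for each account; never reuse one.
    print(generate_password())
```

The `secrets` module draws from the operating system's cryptographically secure random source, which is why it is preferred here over the general-purpose `random` module.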

Conclusion

As we move forward, the interplay between evolving data protection laws, technological advancements, and AI's expanding capabilities will continue to shape the landscape of digital security and user privacy. Striking a balance between innovation and regulation while ensuring the fidelity of AI models' data remains a critical challenge for developers and policymakers alike.

As ChatGPT and its multiple competitors continue to shape the future of conversational AI, addressing data security concerns remains a top priority. Balancing the scales between innovation and user protection is an ongoing challenge, but a safer digital interaction space can be cultivated with collective vigilance and responsible practices. Awareness, education, and transparency are the keystones in navigating the complex yet promising domain of AI data security.

Explore how Go Wombat implements cutting-edge security measures in AI integration to safeguard user data and privacy. Contact us to learn more about our secure ChatGPT integration solutions.